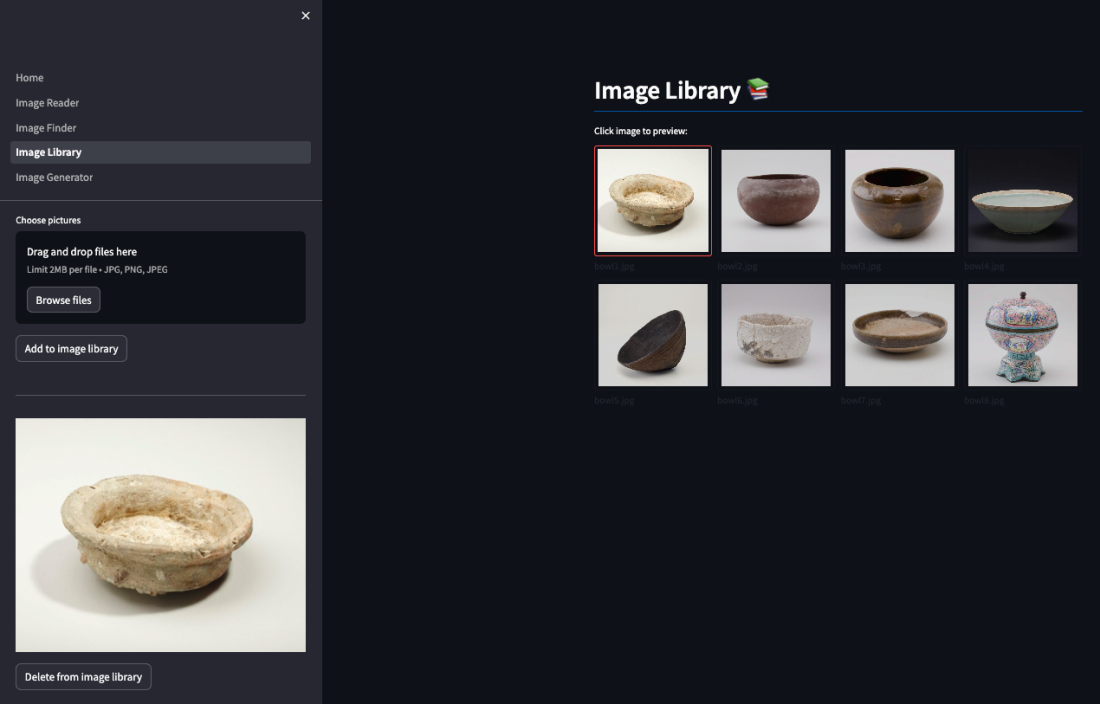

Managing and organising large collections of images can be a daunting task. With the advent of Generative AI (GenAI), we can now change the way we interact with and search for images. In this blog post, we'll explore how to use GenAI to build …

Prompt Engineering with Claude 3 Haiku

Ten days after Anthropic's Claude 3 Sonnet landed in Amazon Bedrock, the Claude 3 Haiku model is now available on Amazon Bedrock as well. Haiku is one of the fastest and most affordable models on the market in its intelligence category. As you can see in the following table, Haiku's pricing is very competitive in the …
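
If you want to experiment with Haiku prompts yourself, the sketch below builds a request body for the Anthropic Messages API on Amazon Bedrock, with a system prompt and an optional prefilled assistant turn (a prompt-engineering technique Anthropic documents for steering output format). It is an illustration under assumptions, not the method from the post: `build_haiku_request` is a hypothetical helper, and the model ID and `anthropic_version` value are taken from the public Bedrock documentation, so check them against your region.

```python
import json

# Assumed Bedrock model ID for Claude 3 Haiku; verify it in the Bedrock console.
HAIKU_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_haiku_request(system_prompt, user_prompt, prefill=None, max_tokens=512):
    """Return a JSON request body for Claude 3 on Bedrock (hypothetical helper)."""
    messages = [{"role": "user", "content": user_prompt}]
    if prefill:
        # Prefilling the assistant turn steers the reply's format,
        # e.g. starting with "{" nudges the model towards JSON output.
        messages.append({"role": "assistant", "content": prefill})
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": messages,
    }
    return json.dumps(body)


# Usage (requires AWS credentials and Bedrock model access):
# import boto3
# client = boto3.client("bedrock-runtime")
# body = build_haiku_request("Answer in JSON.", "List three uses of a fast LLM.", prefill="{")
# response = client.invoke_model(modelId=HAIKU_MODEL_ID, body=body)
# print(json.loads(response["body"].read())["content"][0]["text"])
```

Keeping the body builder separate from the network call makes it easy to compare prompt variants side by side before spending tokens on them.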

Image-Reader: A project to explore Claude 3 Vision Capabilities

A week ago, AWS announced that Anthropic's Claude 3 Sonnet model is now available on Amazon Bedrock. I was eager to try it, especially its vision capabilities, as it is the first multimodal foundation model on Amazon Bedrock, excluding embedding models. According to Anthropic's introduction, the Claude 3 family is smarter, faster and …
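
To give a feel for how an image reaches Claude 3, here is a minimal sketch of a Messages API request that pairs one base64-encoded image with a text question. The content-block layout and supported media types follow Anthropic's public documentation; `build_vision_request` is a hypothetical helper and the Sonnet model ID in the usage comment is an assumption, not necessarily what the Image-Reader project uses.

```python
import base64
import json

# Image formats Claude 3 accepts, per Anthropic's documentation.
SUPPORTED_MEDIA_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def build_vision_request(image_bytes, media_type, question, max_tokens=1024):
    """Return a JSON body pairing one image with a text prompt (hypothetical helper)."""
    if media_type not in SUPPORTED_MEDIA_TYPES:
        raise ValueError(f"unsupported media type: {media_type}")
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Image block: base64 data plus its MIME type.
                    {
                        "type": "image",
                        "source": {"type": "base64", "media_type": media_type, "data": image_b64},
                    },
                    # Text block: the instruction about the image.
                    {"type": "text", "text": question},
                ],
            }
        ],
    }
    return json.dumps(body)


# Usage (requires AWS credentials and Bedrock access to Claude 3 Sonnet):
# import boto3
# with open("photo.jpg", "rb") as f:
#     body = build_vision_request(f.read(), "image/jpeg", "Describe this image.")
# client = boto3.client("bedrock-runtime")
# response = client.invoke_model(modelId="anthropic.claude-3-sonnet-20240229-v1:0", body=body)
# print(json.loads(response["body"].read())["content"][0]["text"])
```

Placing the image block before the text block mirrors the ordering Anthropic recommends for single-image prompts.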

Increase max input length for HuggingFace model in SageMaker deployment

I deployed the HuggingFace zephyr-7b-beta model to SageMaker using the default deploy.py script. When I invoked the model endpoint, I received the error "ValueError: Error raised by inference endpoint: An error occurred (ModelError) when calling the InvokeEndpoint operation: Received client error (422) from primary with message "{"error":"Input validation error: inputs must have less than …
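
The "Input validation error" above is the message returned by Hugging Face's Text Generation Inference (TGI) container, so a common fix is to raise its token limits through the container environment. The sketch below assumes the endpoint runs the TGI-based Hugging Face LLM container and uses its `MAX_INPUT_LENGTH` and `MAX_TOTAL_TOKENS` settings; `build_tgi_env` and the limit values are hypothetical illustrations, not the exact change described in the post.

```python
import json


def build_tgi_env(model_id, num_gpus=1, max_input_length=2048, max_total_tokens=4096):
    """Return a TGI container environment for SageMaker (hypothetical helper)."""
    # TGI requires the prompt budget to be strictly smaller than the total budget.
    if max_input_length >= max_total_tokens:
        raise ValueError("max_input_length must be smaller than max_total_tokens")
    return {
        "HF_MODEL_ID": model_id,
        "SM_NUM_GPUS": json.dumps(num_gpus),
        # Maximum number of prompt tokens the endpoint accepts.
        "MAX_INPUT_LENGTH": json.dumps(max_input_length),
        # Prompt tokens plus generated tokens allowed per request.
        "MAX_TOTAL_TOKENS": json.dumps(max_total_tokens),
    }


# Usage with the SageMaker Python SDK (requires an AWS account and an IAM role):
# from sagemaker.huggingface import HuggingFaceModel, get_huggingface_llm_image_uri
# config = build_tgi_env("HuggingFaceH4/zephyr-7b-beta", max_input_length=3000, max_total_tokens=4096)
# model = HuggingFaceModel(image_uri=get_huggingface_llm_image_uri("huggingface"), env=config, role=role)
# predictor = model.deploy(initial_instance_count=1, instance_type="ml.g5.2xlarge")
```

Depending on the TGI version, `MAX_BATCH_PREFILL_TOKENS` may also need to be at least `MAX_INPUT_LENGTH` when you raise the input limit well above the default.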

Use Amazon Q with CodeWhisperer

Today I would like to share how to use Amazon Q with CodeWhisperer for use cases such as self-learning, code review, bug fixing and more. I previously wrote a blog post on how to use CodeWhisperer to improve developer productivity. If you missed it, here it is: "Use Amazon CodeWhisperer for free". In that post, I explained …